Phase estimation in single-channel speech enhancement and separation

Signal Processing and Speech Communication (SPSC) Lab


Methods for recovering a target speech signal from a single-channel recording fall into two groups: 1) single-channel speech separation and 2) single-channel speech enhancement. While there has been some success in both groups, these methods typically ignore phase estimation when reconstructing the enhanced or separated output signal. Instead, they reuse the phase of the mixed signal directly. Using the noisy or mixture phase can result in perceptual artifacts, such as musical noise in speech enhancement and cross-talk in speech separation (see [3-5] for some references on single-channel speech separation methods). We recently studied the importance of phase information in both the signal reconstruction and the parameter estimation stages of single-channel speech algorithms. In [2], we showed that replacing the mixture/noisy phase with an estimated one can lead to considerable improvement in the perceived signal quality in single-channel speech separation. Furthermore, the phase plays a role in the parameter estimation stage of single-channel speech enhancement and separation: in [1], we reported improved separation performance when phase information is included, compared with previous phase-independent methods.
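To make the effect of reusing the mixture phase concrete, the following minimal sketch looks at a single time-frequency bin. It is an illustration only, not the estimation method of [1] or [2]: the coefficient values and the helper `reconstruct` are hypothetical, and the target magnitude is assumed to be known exactly so that any remaining error comes from the phase alone.

```python
import cmath
import math


def reconstruct(magnitude: float, phase: float) -> complex:
    """Build one complex spectral coefficient from a magnitude and a phase (radians)."""
    return cmath.rect(magnitude, phase)


# Hypothetical values for a single time-frequency bin.
target = cmath.rect(1.0, 0.3)       # target speech coefficient S
interferer = cmath.rect(0.8, 2.0)   # interfering speaker or noise coefficient D
mixture = target + interferer       # observed coefficient Y = S + D

# Same (ideal) magnitude, two different phase choices.
est_mixture_phase = reconstruct(abs(target), cmath.phase(mixture))
est_target_phase = reconstruct(abs(target), cmath.phase(target))

err_mixture_phase = abs(target - est_mixture_phase)
err_target_phase = abs(target - est_target_phase)

# Closed form of the phase-only error: 2 |S| |sin(delta / 2)|,
# where delta is the difference between the mixture and target phases.
phase_diff = cmath.phase(mixture) - cmath.phase(target)
err_closed_form = 2.0 * abs(target) * abs(math.sin(phase_diff / 2.0))

print(f"error with mixture phase: {err_mixture_phase:.3f}")
print(f"error with target phase:  {err_target_phase:.3f}")
print(f"closed-form phase error:  {err_closed_form:.3f}")
```

Even with a perfect magnitude, the error grows with the phase difference between mixture and target, which is why a better phase estimate can improve the reconstructed signal.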

[1] Pejman Mowlaee, Rainer Martin, “On Phase Importance in Parameter Estimation for Single-channel Source Separation”, Int. Workshop Acoust. Signal Enhancement (IWAENC), Aachen, Germany, Sep. 2012.

[2] Pejman Mowlaee, Rahim Saeidi, Rainer Martin, “Phase Estimation for Signal Reconstruction in Single-channel Source Separation”, in Proc. 13th Annual Conference of the Int. Speech Communication Association (INTERSPEECH), Portland, USA, Sep. 2012.

[3] Pejman Mowlaee, “New Strategies for Single-channel Speech Separation”, PhD thesis, Institute for Electronic System, Aalborg University, Aalborg, Denmark, 2010.

[4] Pejman Mowlaee, Mads Christensen, S. H. Jensen, “New Results on Single-channel Speech Separation Using Sinusoidal Modeling”, IEEE Trans. Audio, Speech, and Language Process., vol. 19, no. 5, pp. 1265-1277, 2011.

[5] Pejman Mowlaee, Rahim Saeidi, Mads Christensen, Zheng-Hua Tan, Tomi Kinnunen, Pasi Franti, S. H. Jensen, “A Joint Approach for Single-channel Speaker Identification and Speech Separation”, IEEE Trans. Audio, Speech, and Language Process., vol. 20, no. 9, pp. 2586-2601, 2012.


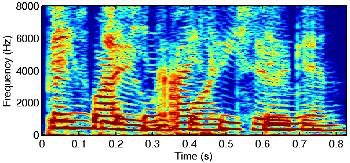

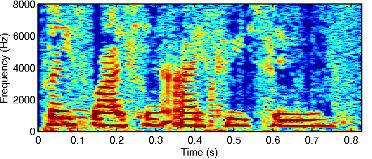

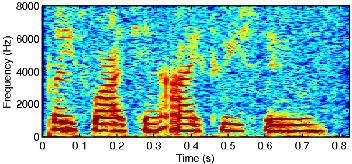

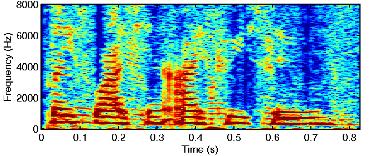

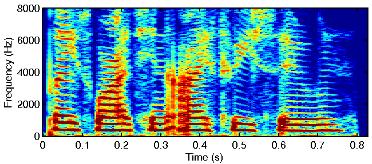

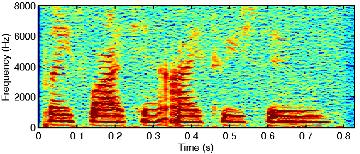

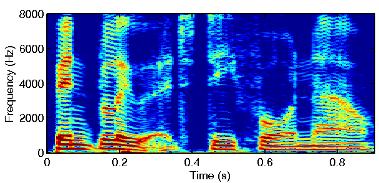

Example 1: Speech Separation

State-of-the-art single-channel speech separation techniques separate the sources only to a certain extent. Because these methods employ the mixture phase, some cross-talk remains in their output, and our proposed phase estimation approach can reduce it further. The following examples illustrate the impact of phase information in two scenarios: 1) clean magnitude spectra, where a priori information about the magnitude spectra of the signals is provided (ideal scenario), and 2) quantized magnitude spectra, where the magnitude spectra are selected from a quantizer (realistic scenario).

1) Clean target speech signal (clean phase)

2) Speech mixture of two speakers (mixture phase)

3) Ideal binary mask separated signals (mixture phase)

4) Separated signal using the proposed method (estimated phase)

5) Quantized magnitude spectrum (mixture phase)

6) Benchmark method using MISI (estimated phase)

The proposed phase estimation algorithm leads to further removal of the residual competing speaker (male) from the separated female speech, whereas separation methods that use the mixture phase retain traces of the interfering signal. Our proposed phase estimation approach therefore achieves better separation performance by filtering out more of the interference. We conclude that by employing phase information, it is possible to push the limits of state-of-the-art single-channel source separation and speech enhancement methods further.
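The residual interference described above can be illustrated with the ideal binary mask case from the list of examples. The sketch below is a toy illustration under stated assumptions, not the proposed method: the four spectral coefficients per speaker are hypothetical, and the mask is "ideal" in the sense that it is computed from the known magnitudes of both speakers.

```python
import cmath

# Hypothetical spectral coefficients of one frame (four frequency bins) per speaker.
female = [cmath.rect(1.0, 0.2), cmath.rect(0.1, 1.0),
          cmath.rect(0.7, -0.5), cmath.rect(0.05, 2.0)]
male = [cmath.rect(0.3, 1.5), cmath.rect(0.9, -1.0),
        cmath.rect(0.4, 2.5), cmath.rect(0.6, 0.0)]
mixture = [f + m for f, m in zip(female, male)]

# Ideal binary mask for the female target: keep a bin when the target
# magnitude exceeds the interferer magnitude, discard it otherwise.
mask = [1.0 if abs(f) > abs(m) else 0.0 for f, m in zip(female, male)]

# Masking the mixture keeps the mixture magnitude and the mixture phase.
masked = [g * y for g, y in zip(mask, mixture)]

# Residual interference (cross-talk) left in the retained bins.
residual = [abs(s_hat - f)
            for s_hat, f, g in zip(masked, female, mask) if g == 1.0]

print("mask:", mask)
print("residual per retained bin:", [round(r, 3) for r in residual])
```

In every retained bin the masked output still contains the full male coefficient, so the residual equals the interferer magnitude in that bin. This is the trace of the competing speaker that remains when the mixture phase is reused.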


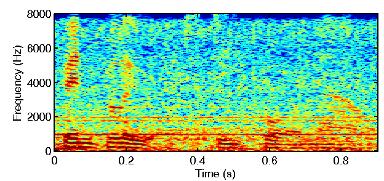

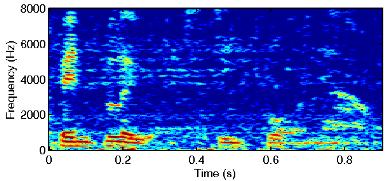

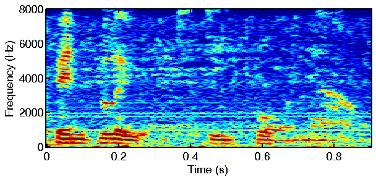

Example 2: Single-channel Speech Enhancement

Previous single-channel speech enhancement methods focus on estimating optimal filters in the magnitude spectrum domain to enhance the noisy magnitude spectrum. They directly use the observed noisy phase spectrum when reconstructing their enhanced output signals. The following experiments show the effectiveness of the proposed phase estimation algorithm in improving the perceived signal quality of the enhanced speech signal. We consider three scenarios: 1) both speech and noise spectra are known a priori (ideal a priori SNR); 2) noise is known but speech is estimated using a state-of-the-art enhancement algorithm (IMCRA); 3) blind scenario, where both speech and noise are estimated by the enhancement algorithm.
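As a rough illustration of the conventional pipeline described above, the sketch below applies a Wiener gain to one noisy spectral coefficient and reconstructs it with the noisy phase. Several parts are assumptions made for the example: the Wiener gain stands in for whichever magnitude-domain filter a given method uses, the coefficient values are hypothetical, the ideal a priori SNR is approximated by the instantaneous power ratio, and the clean phase is used in place of an estimated phase.

```python
import cmath


def wiener_gain(a_priori_snr: float) -> float:
    """Wiener gain G = xi / (1 + xi) for a linear (not dB) a priori SNR xi."""
    return a_priori_snr / (1.0 + a_priori_snr)


# Hypothetical single-bin values; in the ideal scenario both are known.
speech = cmath.rect(1.0, 0.4)
noise = cmath.rect(0.5, -1.2)
noisy = speech + noise

# Ideal a priori SNR, approximated here by the instantaneous power ratio.
xi = abs(speech) ** 2 / abs(noise) ** 2
gain = wiener_gain(xi)

# Conventional reconstruction: enhanced magnitude combined with the noisy phase.
enhanced_noisy_phase = cmath.rect(gain * abs(noisy), cmath.phase(noisy))

# Same enhanced magnitude with a different phase; the clean phase stands in
# for the output of a phase estimator.
enhanced_clean_phase = cmath.rect(gain * abs(noisy), cmath.phase(speech))

err_noisy_phase = abs(speech - enhanced_noisy_phase)
err_clean_phase = abs(speech - enhanced_clean_phase)

print(f"gain: {gain:.2f}")
print(f"error with noisy phase: {err_noisy_phase:.3f}")
print(f"error with clean phase: {err_clean_phase:.3f}")
```

Both outputs share the same enhanced magnitude, so the difference between the two errors is due to the phase choice alone.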

1) Clean speech signal

2) Noisy speech signal

3) Enhanced speech signal using noisy phase

4) Enhanced speech signal (scenario 3)

By employing the phase estimation algorithm, it is possible to further improve the perceived signal quality of the noise-corrupted speech signal. We therefore believe that enhancing the phase spectrum of the noisy speech signal can push the limits of current single-channel speech enhancement algorithms further.
